Big Data Analytics, the Cloud, the Internet of Things: these are the buzzwords of the ICT (Information and Communications Technology) industry, and all of them are fueling the growth of the data center market across the globe at an alarming rate. Cisco predicted that by 2018, more than three quarters (78%) of workloads will be processed by cloud data centers and 22% by traditional data centers. While overall data center workloads will nearly double (1.9-fold) from 2013 to 2018, cloud workloads will nearly triple (2.9-fold) over the same period. The workload density (that is, workloads per physical server) for cloud data centers was 5.2 in 2013 and will grow to 7.5 by 2018.
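The growth multiples quoted above can be turned into annual rates with a little arithmetic. The minimal Python sketch below assumes that the 1.9-fold and 2.9-fold figures apply over the five years from 2013 to 2018, and computes the implied compound annual growth rates together with the 2013 cloud share of workloads that these rounded figures imply. The function and constant names are illustrative, and the derived values are back-of-the-envelope estimates, not numbers published by Cisco.

```python
def cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate implied by growing `multiple`-fold over `years`."""
    return multiple ** (1.0 / years) - 1.0

YEARS = 2018 - 2013          # forecast window used in the Cisco figures
OVERALL_GROWTH = 1.9         # all data center workloads, 2013 -> 2018
CLOUD_GROWTH = 2.9           # cloud workloads, 2013 -> 2018
CLOUD_SHARE_2018 = 0.78      # cloud share of all workloads in 2018

overall_rate = cagr(OVERALL_GROWTH, YEARS)
cloud_rate = cagr(CLOUD_GROWTH, YEARS)

# Cloud workloads in 2018 = 2.9 x cloud workloads in 2013, and total workloads
# in 2018 = 1.9 x total in 2013, so: share_2013 * 2.9 = share_2018 * 1.9
implied_cloud_share_2013 = CLOUD_SHARE_2018 * OVERALL_GROWTH / CLOUD_GROWTH

# Growth in workload density (workloads per physical server) for cloud data centers
density_growth = 7.5 / 5.2

print(f"Overall workloads: {overall_rate:.1%} per year")
print(f"Cloud workloads:   {cloud_rate:.1%} per year")
print(f"Implied 2013 cloud share: {implied_cloud_share_2013:.0%}")
print(f"Cloud workload density growth: {density_growth:.2f}x")
```

With these inputs, cloud workloads grow at roughly 24% a year against about 14% overall, and the implied 2013 cloud share works out to about half of all workloads.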

Great opportunities come with great challenges. Ask data center operators, ‘What is the most challenging part of running a data center?’ and most will answer that it is the cost of the data center’s HVAC (Heating, Ventilation and Air Conditioning) system. In many data centers, the annual cost of HVAC runs into millions of dollars, and with the rising cost of energy, it is the single item many operators would most like to see reduced. But why is HVAC so costly in data centers? Data centers have historically used precision cooling to keep the environment inside the data center within strict limits around the clock, a practice that dates back to the 1950s. However, rising energy costs, coupled with impending carbon taxation, are causing many organisations to re-examine data center energy efficiency and the assumptions driving their existing practices. Hence the question: “Do data centers need to be so cold all the time?”

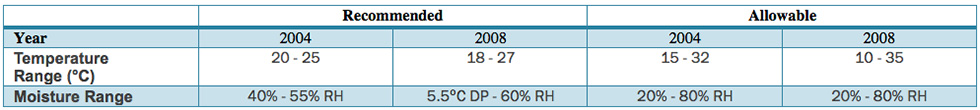

Over time, the IT industry has worked on innovations that widen the acceptable thermal and humidity ranges. It is widely assumed that operating at temperatures higher than typical working conditions harms the reliability of electronic and electrical systems. However, operators and IT users have poorly understood how operating conditions actually affect the reliability and lifespan of IT systems. A looser environmental envelope can reduce some of the operational costs associated with a data center’s HVAC system. In particular, data centers that rely on economizers instead of a mechanical, chiller-based cooling plant can be significantly less expensive both to build and to operate. One such method is natural air cooling, known as ‘Airside Cooling’. How big are the savings? Gartner Vice President David Cappuccio estimates that raising the temperature by 1°F can save about 3% in energy costs on a monthly basis. What is the ideal temperature that does not compromise reliability? How hot can devices run without increasing the chance of failure? To give direction to the IT and data center facilities industry, the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE) published its first guidance on recommended temperature and humidity levels in 2004. In 2008, the guidance was revised to reflect new agreed-upon ranges, which are shown in Table 1.
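To get a feel for Cappuccio’s rule of thumb, the Python sketch below estimates the saving from raising the setpoint by a given number of degrees Celsius. It assumes, as a simplification, that each 1°F increase saves 3% of the energy cost that remains (a compounding reading, so the saving can never exceed 100%). The function name and the example setpoints are hypothetical, and real savings depend heavily on the facility, its climate and its cooling plant.

```python
F_PER_C = 1.8           # a temperature change of 1°C equals a change of 1.8°F
SAVING_PER_F = 0.03     # Gartner rule of thumb: ~3% energy cost saving per 1°F

def estimated_saving(delta_c: float, saving_per_f: float = SAVING_PER_F) -> float:
    """Fraction of energy cost saved by raising the setpoint by delta_c °C.

    Assumption: each 1°F step saves `saving_per_f` of the cost that remains,
    so the savings compound rather than add linearly.
    """
    delta_f = delta_c * F_PER_C
    return 1.0 - (1.0 - saving_per_f) ** delta_f

# Hypothetical example: raising the supply-air setpoint from 20°C to 25°C (9°F)
saving = estimated_saving(25.0 - 20.0)
print(f"Raising the setpoint by 5°C (9°F) saves roughly {saving:.0%}")
```

A purely linear reading (3% × 9 = 27%) gives a somewhat higher figure; either way, the result is a rough planning estimate rather than a guaranteed saving.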

table-1

Table 1 – ASHRAE Guideline

In its guidance, ASHRAE defined two operational ranges: “Recommended” and “Allowable.” Operating in the Recommended range can provide maximum device reliability and lifespan while minimizing device energy consumption, insofar as ambient temperature and humidity affect these factors. The Allowable range permits operation of IT equipment at wider tolerances, accepting some ‘potential reliability risks’ from electrostatic discharge (ESD), corrosion or temperature-induced failures, as well as the potential for increased IT power consumption. In practice, many vendors support temperature and humidity ranges that are wider than the ASHRAE 2008 Allowable range. It is important to note that the ASHRAE 2008 guidance represents only the agreed-upon intersection of vendors’ ranges, which enables equipment from multiple vendors to run effectively in the same data center under a single operating regime. In 2011, ASHRAE updated its guidance again, defining two additional classes of operation for data centers with higher Allowable temperature boundaries of up to 40°C and 45°C respectively.
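The Recommended/Allowable split lends itself to a simple check of measured inlet temperatures. The Python sketch below classifies a dry-bulb inlet temperature against the recommended range and against the allowable range of a chosen equipment class. The numeric limits are assumed from the ASHRAE 2011 thermal guidelines (recommended 18–27°C; allowable A1 15–32°C, A2 10–35°C, A3 5–40°C, A4 5–45°C) and should be verified against the edition you design to. Humidity and dew point limits are deliberately left out, and the function and constant names are hypothetical.

```python
# Dry-bulb inlet temperature limits in °C (assumed from ASHRAE 2011; verify
# against the current edition). Humidity and dew point limits are omitted.
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = {
    "A1": (15.0, 32.0),
    "A2": (10.0, 35.0),
    "A3": (5.0, 40.0),   # class added in 2011, allowable up to 40°C
    "A4": (5.0, 45.0),   # class added in 2011, allowable up to 45°C
}

def classify_inlet(temp_c: float, equipment_class: str = "A1") -> str:
    """Return 'recommended', 'allowable' or 'out of range' for an inlet temperature."""
    low, high = RECOMMENDED_C
    if low <= temp_c <= high:
        return "recommended"
    low, high = ALLOWABLE_C[equipment_class]
    if low <= temp_c <= high:
        return "allowable"
    return "out of range"

# Compare a few sample readings against class A1 and class A4 equipment
for reading in (22.0, 30.0, 38.0, 43.0):
    print(reading, classify_inlet(reading, "A1"), classify_inlet(reading, "A4"))
```

A reading in the allowable band is not a failure; it signals that the equipment is running in the wider envelope that its class permits, with the reliability trade-offs described above.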

One of the companies that has focused on higher operating temperatures is Google. Erik Teetzel, an Energy Program Manager at Google, said, “The guidance we give to data center operators is to raise the thermostat.” Pushing the boundaries of allowable working temperature, Google has operated its data center in Belgium without chillers since 2008, relying on natural airside cooling; at certain times of the year, the temperature there can reach 95°F (35°C). That is too hot for people, but the machines continue to work just fine. Studies by Intel and Microsoft have shown that most servers cope well with higher temperatures and outside air, easing fears of higher hardware failure rates. In fact, Dell recently said it would warranty its servers to operate in environments as warm as 45°C (113°F), nearly 20°C above the 27°C upper limit of ASHRAE’s recommended range. Hence the global adoption of higher operating temperatures in data centers is inevitable.

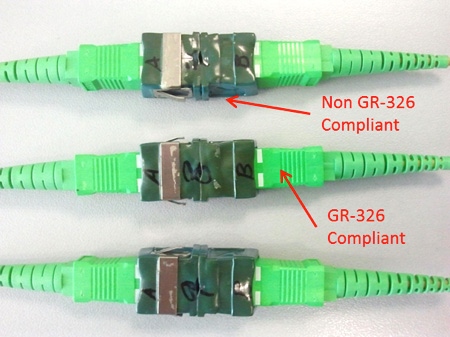

Figure 3 – Sub-standard Material unable to withstand environmental testing

How will the passive infrastructure in data centers fare? Is the new, higher operating temperature a threat to its reliability as well? Fortunately, an operating temperature of 45°C is no stranger to passive infrastructure, and reputable component suppliers have long tested at high operating temperatures in accordance with international standards such as IEC 61753-1 and Telcordia GR-326-CORE. Some test regimes push products to the extreme, subjecting samples to accelerated thermal aging that emulates a service lifetime of approximately 20 years. That is a sensible lifetime for passive infrastructure: active equipment has a service lifetime of approximately 5 years (some is replaced even sooner by newer models), whereas passive components such as jumper cables, trunk cables, connectors, adapters, cable trunking and conduits are replaced far less often. In some cases, the passive infrastructure has been in place since the day the data center began operating, and it is replaced only because it no longer meets the required performance specification, not because of component failure like that shown in Figure 3. In essence, the adoption of higher operating temperatures in data centers is inevitable; we just have to ensure that what we design and install today is ready to meet these new challenges.
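Accelerated thermal aging works by running samples hotter than in service so that temperature-driven degradation happens faster. A widely used way to relate test time to service time is the Arrhenius model, in which the acceleration factor is AF = exp[(Ea/k)(1/T_use − 1/T_test)], with temperatures in kelvin and k the Boltzmann constant. The Python sketch below uses this model to estimate how many test hours at an elevated temperature correspond to a 20-year service life at 45°C. The activation energy (0.7 eV) and the 85°C test temperature are assumptions chosen purely for illustration; they are not values taken from IEC 61753-1 or Telcordia GR-326-CORE, which define their own test conditions.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5   # Boltzmann constant in eV/K
HOURS_PER_YEAR = 24 * 365.25

def arrhenius_acceleration(ea_ev: float, use_c: float, test_c: float) -> float:
    """Acceleration factor of a test at test_c relative to service at use_c (both °C)."""
    t_use = use_c + 273.15    # convert to kelvin
    t_test = test_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use - 1.0 / t_test))

# Illustrative assumptions, not values from IEC 61753-1 or GR-326-CORE
EA_EV = 0.7          # hypothetical activation energy of the dominant failure mechanism
SERVICE_C = 45.0     # elevated data center operating temperature
TEST_C = 85.0        # hypothetical oven temperature for the aging test
SERVICE_YEARS = 20   # target service life for passive infrastructure

af = arrhenius_acceleration(EA_EV, SERVICE_C, TEST_C)
test_hours = SERVICE_YEARS * HOURS_PER_YEAR / af
print(f"Acceleration factor: {af:.1f}")
print(f"Test time to emulate {SERVICE_YEARS} years at {SERVICE_C}°C: {test_hours:,.0f} h")
```

A higher assumed activation energy shortens the required test time and a lower one lengthens it, so the choice of Ea matters as much as the oven temperature when interpreting any "20-year equivalent" claim.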